Machine Learning in Weather and Climate

The European Centre for Medium-Range Weather Forecasts (ECMWF) offers a massive open online course (MOOC) called "Machine Learning in Weather and Climate." This course provides an overview of the use of machine learning techniques in the field of weather and climate science. It covers a range of topics, including the basics of machine learning algorithms, applications of machine learning to weather and climate data, and best practices for the use of machine learning in these fields. Whether you are a scientist, a student, or simply interested in the application of machine learning to the study of weather and climate, this MOOC is a valuable resource for gaining a comprehensive understanding of this rapidly growing area of study.

The following is a summary and documentation of what I learned in the MOOC :)

Link to the MOOC: https://lms.ecmwf.int/?redirect=0

Machine Learning

Keywords: Remote Sensing, ECMWF, Weather and Climate, Observations, Forecast Model, Data Assimilation

Tier 1 Module 1: Introduction to ML in Weather & Climate

Why ML for weather forecast:

Machine learning is used for weather forecasting to improve the accuracy and efficiency of predictions by detecting patterns and relationships in large amounts of data. The use of machine learning algorithms can lead to better simulation of real-world weather phenomena and help detect events in real-time. With powerful optimizing methods and access to large amounts of training data, machine learning can help improve the value of forecasts, identify problems before they occur, and help predict the specific consequences of climate change. The models can also be updated when new data is available and different modalities can be incorporated.

How does it change the state-of-the-art:

Machine learning in weather forecasting has changed the state of the art by enabling the integration of data from various sources and improving the accuracy of forecasts. With new resources provided by technology, machine learning can access and use all available information. The use of machine learning techniques, such as image classification and data simulation, has increased the efficacy of forecasts. Machine learning has also opened up new possibilities for exploring the weather data and asking new questions by merging physical domain knowledge with highly statistical tools like deep learning. The ability to process large amounts of data automatically has reduced the time needed for forecasts and analysis, and has led to the development of better models.

There are several types of machine learning, each with a different approach to learning from data:

- Supervised Learning: In this type of machine learning, the algorithm is provided with data and answers to learn the rules.

- Unsupervised Learning: This type of machine learning is used for large datasets without labels. The aim is to detect the internal structure of the data and group similar patterns or routines.

- Semi-supervised Learning: This type of machine learning is used for very large datasets with partial labels. It combines the benefits of both supervised and unsupervised learning. An example application is cloud detection in satellite images.

- Reinforcement Learning: In this type of machine learning, the rules are defined by the algorithm, and it learns through trial and error to find the optimal solution.

Each of these approaches has its own advantages and limitations and is suited for different types of problems and datasets.

Why consider Machine Learning for weather and climate modelling:

Machine learning is a valuable tool for weather and climate modeling as it can help improve our understanding of the earth system. The increasing amount of data available, combined with the ability of machine learning to be applied in various ways, makes it a useful tool for interdisciplinary research projects. Machine learning can improve models by fusing information from different data sources, detecting features, correcting biases, and improving the quality control of observations. Additionally, machine learning can speed up simulations, reduce energy consumption, and optimize data workflows. Machine learning can also help connect communities by providing predictions for various sectors such as health, energy, transport, pollution, and extremes. Several open-source software libraries for machine learning are available, including TensorFlow, Keras, and PyTorch.

Challenges for Machine Learning in weather and climate:

The challenges for using machine learning in weather and climate include the large amount of data that must be processed every day, which is estimated to be 800 million observations to create a snapshot of Earth's weather. Another challenge is the lack of data to capture a good timeline, which can make it difficult to train machine learning models effectively. Additionally, there is a need for communication between the Fortran and Python programming languages, which can present a technical challenge for implementing machine learning in the field of weather and climate.

Tier 1 Module 2: Observations

The challenges of using observations in weather and climate modeling include errors in the measurement process, both random and systematic. To use observations effectively, it is necessary to develop a physical model of the measurement process and to have a good understanding of the observation operator, which maps the model state to the observed quantities. In-situ observations, such as measurements of atmospheric temperature and pressure, are limited in availability, particularly in areas like the oceans or Africa, which is why satellite observations are important. A further challenge is the wide variety of formats and metadata of the observations used, which can make it difficult to integrate the data into models.

Machine learning can help improve observations in weather and climate by offering advantages such as speed (replacing existing physical models with a faster alternative), the ability to learn new predictive relationships, and the correction of systematic errors (biases). Machine learning can also skip physical models and work directly with observation data. However, the main challenge is that the bias correction model must be retrained continually as new observations come in and conditions change.
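
As a minimal sketch of what correcting a systematic error means, the snippet below estimates a constant bias from past observation-minus-model departures and removes it from a new observation. The constant-bias assumption, the assumption that the model equivalents are themselves unbiased, and all names and numbers are illustrative and not taken from the course. Operational schemes are more elaborate and, as noted above, have to be re-estimated as conditions change.

```python
from statistics import mean

def estimate_bias(observations, model_equivalents):
    """Mean observation-minus-model departure over a training period."""
    return mean(o - m for o, m in zip(observations, model_equivalents))

def correct(observations, bias):
    """Remove the estimated systematic error from new observations."""
    return [o - bias for o in observations]

# Hypothetical brightness temperatures (K): the instrument reads about 0.5 K too warm.
past_obs = [250.6, 251.4, 249.5, 252.5]
past_model = [250.0, 251.0, 249.0, 252.0]

bias = estimate_bias(past_obs, past_model)
corrected = correct([253.5], bias)
```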

The main difficulties of applying Machine Learning for observation processing are keeping up with changing conditions and extrapolating outside the training dataset. Another challenge is the availability of training data, especially for new physical applications and replacing existing components for bias correction.

The role of Machine Learning in satellite data acquisition and use in weather and climate applications:

AI and ML are used at various stages of the EUMETSAT cycle for anomaly detection in the control center and for turning satellite measurements into atmospheric and geophysical information. ML is used to prepare the data and make it more accessible. The future of EUMETSAT and Copernicus satellites promises even better data and higher resolution monitoring of the atmosphere. This can lead to improved safety through earlier detection of small disturbances, as demonstrated during Hurricane Sandy.

Semantic annotation, benchmark data, unsupervised learning:

Semantic annotation is the process of converting image information into a simplified form for better processing. This involves reducing the complexity of the information by converting each pixel from real numbers into integer numbers. The goal is to assign a single semantic class to each pixel, which represents the material at the surface. In some cases, multiple labels may be assigned to a single patch of an image. Benchmarking is used to compare algorithms when developing solutions. Good benchmarks have large datasets with spatially separated train and test sets, multiple tasks, and a public leader board. There are several benchmark datasets available, such as BigEarthNet, Sen12MS, and DeepGlobe. These datasets provide image pairs with labels for tasks such as land cover mapping, road detection, and building detection.

Semantic annotation involves converting multidimensional information into a single value (semantic class) corresponding to the material at the surface. Benchmarking helps in comparing algorithms for image processing, by providing large datasets with spatially separated train and test sets, and public leader boards. Unsupervised learning is used when no labels are available and involves processing images using spectral similarities between samples only, like clustering to find "natural" frontiers between the classes. The clusters then need to be manually assigned to classes. Unsupervised methods are used when acquiring labels is unfeasible or very costly.
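
The clustering idea can be illustrated with a plain k-means on scalar pixel values. This is only a sketch under simplifying assumptions: real imagery has several spectral bands per pixel, and the reflectance values and initial centers below are hypothetical.

```python
def assign(values, centers):
    """Index of the nearest center for each value."""
    return [min(range(len(centers)), key=lambda k: abs(v - centers[k]))
            for v in values]

def kmeans_1d(values, centers, iterations=20):
    """Plain k-means on scalar values; `centers` are the initial guesses."""
    centers = list(centers)
    for _ in range(iterations):
        labels = assign(values, centers)
        # Move each center to the mean of the values assigned to it.
        for k in range(len(centers)):
            members = [v for v, lab in zip(values, labels) if lab == k]
            if members:
                centers[k] = sum(members) / len(members)
    return centers, assign(values, centers)

# Hypothetical single-band reflectances: three dark and three bright pixels.
reflectances = [0.05, 0.07, 0.06, 0.45, 0.50, 0.48]
centers, labels = kmeans_1d(reflectances, centers=[0.0, 0.6])
```

The resulting cluster indices carry no meaning by themselves. Deciding that cluster 0 is, say, water is the manual assignment step mentioned above.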

Tier 1 Module 3: Forecast Model

The state of the art in weather and climate modeling involves the use of numerical methods and supercomputers to predict, for example, the weather a few days ahead or the coming summer a month ahead. The Earth is described by grid points rather than continuously, so discretized equations are used to describe the interaction of different variables. The resolution of the model is a crucial factor, and small features like clouds are represented through sub-grid-scale parametrization schemes that use information available on the grid, such as temperature and humidity. The main ingredients of weather and climate models are equations, grid points, discretization, sub-grid-scale parametrization schemes, and supercomputers.
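
To make "discretized equations on grid points" concrete, here is a small sketch that advances the one-dimensional advection equation du/dt + c du/dx = 0 with a first-order upwind scheme on a periodic grid. The equation, the scheme, and the grid size are chosen purely for illustration and are far simpler than what an operational model uses.

```python
def advect_upwind(u, c, dx, dt, steps):
    """Advance du/dt + c du/dx = 0 (c > 0) with a first-order upwind
    scheme on a periodic grid."""
    n = len(u)
    courant = c * dt / dx
    for _ in range(steps):
        # u[i - 1] wraps around for i = 0, which gives periodic boundaries.
        u = [u[i] - courant * (u[i] - u[i - 1]) for i in range(n)]
    return u

# A single "bump" on a 10-point grid, moved with Courant number 1.
field = [0.0] * 10
field[2] = 1.0
moved = advect_upwind(field, c=1.0, dx=1.0, dt=1.0, steps=3)
```

With a Courant number of exactly 1 the scheme shifts the bump by one grid point per step. Smaller values smear it out, which is one reason resolution and time step matter.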

Forecasting with Machine Learning:

Forecasting models are the heart of the system and require a lot of computational resources to run. Machine learning approaches in forecasting can be divided into two categories: acceleration and model improvement. Acceleration aims to reduce the time required to deliver a forecast, while model improvement aims to increase the accuracy of the forecast model through learning. ML can be used to learn new components, correct model drift, or correct biases. The best results of using ML in weather forecasting so far have been in nowcasting, although it is a very challenging task.

The importance of benchmark datasets in data research:

Benchmark datasets provide a way to compare different models, set clear goals for research, and provide accessible data and code. ImageNet is an example of a successful benchmark dataset that transformed the field of machine learning. In the field of Global Medium Range Weather Forecast, the ground truth data is obtained from ERA5, a reanalysis dataset produced by ECMWF. However, the raw data is too large for many machine learning applications, so WeatherBench provides data that is regridded to coarser resolutions and the goal metrics are evaluated at the coarsest resolution of 5.625 degrees.
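
A rough sketch of what regridding to a coarser resolution does is shown below. It uses simple block averaging, which is an assumption made for illustration; the source does not say which regridding method WeatherBench applies. The first two lines only work out the grid size implied by a 5.625 degree spacing.

```python
def coarsen(grid, factor):
    """Average non-overlapping factor x factor blocks of a 2-D list.
    Assumes both dimensions are divisible by `factor`."""
    rows, cols = len(grid), len(grid[0])
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            block = [grid[i][j]
                     for i in range(r, r + factor)
                     for j in range(c, c + factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out

n_lat = int(180 / 5.625)  # latitude points at 5.625 degree spacing
n_lon = int(360 / 5.625)  # longitude points at 5.625 degree spacing

# Toy 4 x 4 field coarsened to 2 x 2.
fine = [[float(i + j) for j in range(4)] for i in range(4)]
coarse = coarsen(fine, 2)
```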

There are different approaches to creating data-driven forecasts in weather forecasting, including direct vs iterative and purely data-driven vs physics-guided. Convolutional Neural Networks (CNNs) are popular for image-based problems, including weather forecasting, as they are efficient in filtering noise and extracting key features. All the machine learning models on the WeatherBench leaderboard use CNNs and have been successful for weather forecasting. There are also Graph Neural Networks (GNNs), which are an optimizable transformation of graph attributes preserving graph symmetries. Keisler (2022) used GNNs with success for weather forecasting.
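
The difference between direct and iterative forecasting can be sketched as follows. The two "models" are hypothetical stand-ins for trained networks and are chosen so that both routes give the same answer; with real models the iterative route accumulates errors from step to step, while the direct route needs a separate model for every lead time.

```python
def iterative_forecast(state, step_model, n_steps):
    """Apply a short-lead-time model repeatedly, feeding outputs back in."""
    for _ in range(n_steps):
        state = step_model(state)
    return state

def direct_forecast(state, lead_model):
    """Apply a model trained for the full lead time once."""
    return lead_model(state)

# Hypothetical stand-ins: a 6 h step that damps an anomaly by 10 %,
# and a 24 h model that applies the equivalent damping in one go.
def step_6h(x):
    return 0.9 * x

def model_24h(x):
    return 0.9 ** 4 * x

anomaly = 2.0
iter_result = iterative_forecast(anomaly, step_6h, n_steps=4)
direct_result = direct_forecast(anomaly, model_24h)
```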

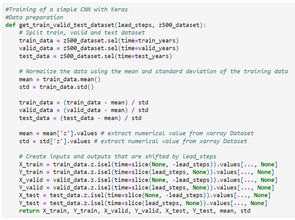

In the Jupyter Notebook exercise, I learned to build a simple neural network on the WeatherBench dataset.

Tier 1 Module 4: Data Assimilation

ECMWF aims to develop and produce global weather forecasts at all ranges, from hours to seasons. This is a problem of initial conditions, where the state of the Earth system is calculated at t = 0h and used to predict the state at for example t = +240h. The data assimilation process at ECMWF involves combining the model forecast (with errors) with observations (with errors) to produce an analysis with smaller errors. The data assimilation cycle runs every 12 hours, blending information from the previous analysis and the latest observations using the 4D-Var algorithm. Although the ECMWF IFS model is sophisticated, uncertainties still exist in both the model forecasts and observations. To represent these uncertainties, ECMWF uses ensemble data assimilation, running 50 member systems that differ because they see randomly perturbed observations and model forecasts. The spread of the ensemble members indicates the uncertainties of the analysis.
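
The idea of combining a forecast and an observation, each with errors, into an analysis with smaller errors can be shown for a single scalar. This is a textbook variance-weighted update and not the 4D-Var algorithm itself; it assumes unbiased, uncorrelated errors, and the numbers are hypothetical.

```python
def analysis(background, obs, var_b, var_o):
    """Scalar analysis: variance-weighted blend of background and observation."""
    gain = var_b / (var_b + var_o)        # weight given to the observation
    x_a = background + gain * (obs - background)
    var_a = (1.0 - gain) * var_b          # analysis error variance
    return x_a, var_a

# Forecast of 280 K and observation of 282 K, both with error variance 1 K^2.
x_a, var_a = analysis(background=280.0, obs=282.0, var_b=1.0, var_o=1.0)
```

With equal error variances the analysis lands halfway between the two values, and its error variance is half that of either input.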

Similarities between data assimilation and machine learning:

Data assimilation and machine learning have similarities in that Deep Learning techniques can be integrated into 4D-Var, a data assimilation method. 4D-Var can be extended to estimate both the state of the system and the model describing the system evolution. The integration of Deep Learning into 4D-Var can range from physically based models to fully statistical models where Neural Networks completely replace the physically-based model. In one configuration, NNs are used to correct model errors during model time stepping. To train the NN, a database of cases can be created using a higher resolution version of the model or direct observations of the system. However, the limitations of using direct observations, such as imperfection and limited spatial coverage, must be considered.
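
For reference, the standard textbook form of the strong-constraint 4D-Var cost function is given below. It is not taken from the course material. Here x_0 is the initial state, x_b the background (previous forecast), B and R_k the background and observation error covariances, H_k the observation operator, M_k the forecast model, and y_k the observations at time step k. The last line is one possible way to write the configuration described above, in which a neural network with parameters θ corrects the model during time stepping; the notation is an assumption.

```latex
J(\mathbf{x}_0) =
  \tfrac{1}{2} (\mathbf{x}_0 - \mathbf{x}_b)^{\mathsf T} \mathbf{B}^{-1} (\mathbf{x}_0 - \mathbf{x}_b)
  + \tfrac{1}{2} \sum_{k=0}^{K}
    \bigl( \mathcal{H}_k(\mathbf{x}_k) - \mathbf{y}_k \bigr)^{\mathsf T}
    \mathbf{R}_k^{-1}
    \bigl( \mathcal{H}_k(\mathbf{x}_k) - \mathbf{y}_k \bigr),
\qquad \mathbf{x}_{k+1} = \mathcal{M}_k(\mathbf{x}_k)

% Model time stepping with a neural-network correction (assumed notation):
\mathbf{x}_{k+1} = \mathcal{M}_k(\mathbf{x}_k) + \mathrm{NN}_{\theta}(\mathbf{x}_k)
```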

Machine Learning tools and ideas are widely accepted in Earth system monitoring and prediction and can be applied to various areas such as model discovery, model emulation, and observation quality control. Deep Learning, a subfield of Machine Learning, has similarities with the ECMWF weak constraint 4D-Var system and is being introduced to improve the analyses and the model. The development of hybrid data assimilation-machine learning systems is a promising example of the role ML can play in enhancing NWP and Climate Prediction.

Model error estimation and correction with Neural Networks is a rapidly growing field in Earth system monitoring and prediction. The weak constraint 4D-Var system and Deep Learning share many aspects and have now been integrated in the ECMWF system to improve analysis and forecasts. The Neural Network 4D-Var is a machine learning algorithm that produces a model of model error, which can be applied to forecasts at any forecast range. The application of NN 4D-Var has been shown to improve analysis and forecast skill and reduce errors. The applications of ML techniques in this field are varied and new ones are regularly appearing.

What is the main conceptual difference between traditional data assimilation and standard Machine Learning applications?

Data assimilation aims to estimate the state of the system, whereas Machine Learning aims to build a model of how the system evolves.

Tier 1 Module 5: Post-Processing

This module focuses on probabilistic weather forecasting using ensemble simulations from numerical weather prediction models. Ensemble forecasts consist of multiple simulations with perturbed initial conditions and model physics, resulting in a collection of possible scenarios for future weather developments. These forecasts are probabilistic and allow for quantifying the inherent forecast uncertainty, making them more advantageous than simple, deterministic predictions. However, ensemble forecasts often show systematic errors and require post-processing to produce a corrected probabilistic forecast. The ultimate goal of probabilistic forecasting should be to maximize the sharpness of the predictive distribution, given that the forecast is calibrated. Calibration refers to the statistical compatibility between the forecast and the observation, which can be empirically verified using verification rank histograms.
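
A verification rank histogram can be computed in a few lines. The sketch below counts, for each forecast case, how many ensemble members lie below the observation. Ties are ignored for simplicity and the toy data are hypothetical. A flat histogram indicates calibration, while a U shape indicates that the ensemble spread is too small.

```python
def rank_histogram(ensembles, observations):
    """Count the rank of each observation within its ensemble."""
    m = len(ensembles[0])
    counts = [0] * (m + 1)
    for members, obs in zip(ensembles, observations):
        rank = sum(1 for x in members if x < obs)  # between 0 and m
        counts[rank] += 1
    return counts

# Four hypothetical cases with a 3-member ensemble each.
ensembles = [[1.0, 2.0, 3.0]] * 4
observations = [0.5, 3.5, 0.2, 2.5]
counts = rank_histogram(ensembles, observations)
```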

Proper scoring rules are useful evaluation metrics for assessing the quality of probabilistic forecasts. They assign a numerical score to a forecast distribution and observation, ensuring that the best possible score would be achieved by the true distribution of the observation. Proper scoring rules are valuable for simultaneously assessing calibration and sharpness, and for comparing the overall forecast quality of competing methods. An example of a proper scoring rule is the Continuous Ranked Probability Score (CRPS), which is commonly used in meteorological applications. The CRPS can be computed in a closed analytical form for both parametric distributions and ensemble forecasts, and it is reported in the same unit as the outcome.
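
The two closed forms mentioned above can be sketched as follows: the standard ensemble estimator of the CRPS and the closed-form CRPS of a Gaussian predictive distribution. Function names are my own, and lower values are better.

```python
import math

def crps_ensemble(members, obs):
    """CRPS of an ensemble: mean |x_i - y| minus half the mean |x_i - x_j|."""
    m = len(members)
    term1 = sum(abs(x - obs) for x in members) / m
    term2 = sum(abs(xi - xj) for xi in members for xj in members) / (2 * m * m)
    return term1 - term2

def crps_gaussian(mu, sigma, obs):
    """Closed-form CRPS of a normal distribution N(mu, sigma^2)."""
    z = (obs - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)
    cdf = 0.5 * (1 + math.erf(z / math.sqrt(2)))
    return sigma * (z * (2 * cdf - 1) + 2 * pdf - 1 / math.sqrt(math.pi))

score_ens = crps_ensemble([0.0, 2.0], obs=1.0)
score_norm = crps_gaussian(mu=0.0, sigma=1.0, obs=0.0)
```

For a single-member ensemble the first function reduces to the absolute error, which is why the CRPS is often described as a generalization of it.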

Ensemble weather forecasts, while improved, still have typical errors. These errors include consistent bias, where the forecast is systematically too high or too low, and an uncertainty range that tends to be too small, failing to accurately quantify forecast uncertainty.

Post-processing of ensemble forecasts involves using distributional regression models to correct systematic errors such as biases and lack of calibration by analyzing past forecast-observation pairs. In the Ensemble Model Output Statistics (EMOS) approach, the target is modeled by a parametric distribution Fθ, where the distribution parameters θ are linked to summary statistics from the ensemble predictions.
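
A minimal EMOS-style sketch is given below, assuming a Gaussian predictive distribution with mean a + b * (ensemble mean) and variance c + d * (ensemble variance). This affine link is a common choice but is an assumption here, since the source does not spell out the link function. For brevity, a and b are fitted by least squares and c and d are simply given; in practice all coefficients are usually estimated jointly by minimizing the CRPS or maximizing the likelihood over past forecast-observation pairs. All numbers are hypothetical.

```python
from statistics import mean, pvariance

def fit_mean_link(ens_means, observations):
    """Least-squares fit of observation = a + b * ensemble mean."""
    mx, my = mean(ens_means), mean(observations)
    sxx = sum((x - mx) ** 2 for x in ens_means)
    sxy = sum((x - mx) * (y - my) for x, y in zip(ens_means, observations))
    b = sxy / sxx
    return my - b * mx, b

def emos_gaussian(members, a, b, c, d):
    """Predictive mean and standard deviation from one ensemble forecast."""
    mu = a + b * mean(members)
    sigma = (c + d * pvariance(members)) ** 0.5
    return mu, sigma

# Hypothetical training data: the ensemble mean is 1 degree too warm.
a, b = fit_mean_link([10.0, 12.0, 14.0, 16.0], [9.0, 11.0, 13.0, 15.0])

# Hypothetical spread coefficients c and d, applied to a new 2-member forecast.
mu, sigma = emos_gaussian([11.0, 13.0], a, b, c=1.0, d=2.0)
```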

When implementing the EMOS model, there are several design choices to consider. These include selecting an appropriate parametric model Fθ for the target variable, choosing the temporal and spatial composition of training datasets for estimating model coefficients, deciding on the choice of link function g and summary statistics from the ensemble predictions X, and potentially using mixture models and forecast combinations. For parametric models, closed-form expressions of the CRPS are available for many distributions, and maximum likelihood estimation of distribution parameters is often a straightforward alternative. The standard temporal choice is a rolling window consisting of past 20-100 days, but using all available past data often has advantages despite potential changes in the NWP model. Spatially, global models composite data from all available locations and lead to stable estimation procedures, while location-specific local models use data from single stations and often result in improved performance. Alternatives include using similarity-based groups of locations. However, it can be difficult to specify the functional form of dependencies if many possible predictors are available.

Alternative post-processing approaches include:

- Bayesian Model Averaging, which uses a mixture distribution of parametric densities associated with individual members, with weights reflecting past performance.

- Member-by-member approaches, which modify individual members to correct their biases and adjust the spread while preserving spatial, temporal, and inter-variable dependencies.

- Quantile regression approaches, which provide probabilistic forecasts in the form of a collection of predictive quantiles without requiring parametric assumptions, but may require additional steps to avoid quantile crossing.

- Analog methods, which select dates in the past where forecasts were most similar to today's forecasts and build a new ensemble from the observations at those dates. They are flexible and easy to implement without parametric assumptions but require large training datasets.
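
The analog method is simple enough to sketch directly. The version below measures similarity by the absolute difference of a single scalar forecast, which is an assumption made to keep the example short; real applications compare several variables or whole fields. The archive values are hypothetical.

```python
def analog_ensemble(todays_forecast, past_forecasts, past_observations, k):
    """Observations from the k past dates whose forecast was closest to today's."""
    order = sorted(range(len(past_forecasts)),
                   key=lambda i: abs(past_forecasts[i] - todays_forecast))
    return [past_observations[i] for i in order[:k]]

# Hypothetical archive of past forecasts and the matching observations.
past_fc = [5.0, 12.0, 8.0, 15.0, 9.0]
past_ob = [4.0, 13.0, 7.5, 14.0, 10.0]

ens = analog_ensemble(8.5, past_fc, past_ob, k=2)
```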

The use of station observations versus gridded re-analyses data is crucial in training and assessing post-processing methods. The relative improvement is usually greater when methods are trained and verified against station observations. Gridded data requires methods to simultaneously post-process forecasts at all locations, such as via spatial processes. Multivariate dependencies across space, time, and variables are important in many applications but are lost when post-processing is applied separately. Copula methods and statistics can restore dependencies from ensemble forecasts or past observations. Regime-dependent post-processing methods aim to account for flow-dependent errors in large-scale weather conditions. However, limitations in training sample size can make robust model parameter estimation challenging. Classical post-processing approaches have limitations in light of high-dimensional, rapidly growing datasets, and additional predictors beyond ensemble forecasts of the target variable. Flexible, data-driven modeling of nonlinear relationships between NWP inputs and forecast distribution parameters, and integration of spatial and temporal information from complex input data can be improved using advances in ML, which have become a recent research interest.

There are some limitations of traditional statistical postprocessing methods such as EMOS in correcting systematic errors of ensemble weather forecasts. One major limitation is that these models only use ensemble forecasts of the target variable as inputs, and including additional predictors is challenging due to the need to specify the functional form of the dependencies of the distribution parameters on all input predictors. This makes it difficult to include spatial or temporal information into model building and estimation. To overcome these limitations, different machine learning approaches to post-processing have been investigated, including gradient boosting and random forests. One direct extension to EMOS based on gradient boosting was proposed by Jakob Messner and co-authors in 2017. In this approach, the EMOS framework is extended by including additional predictor variables and iteratively updating their regression coefficients to improve the model fit.

An alternative approach to statistical post-processing uses neural networks, which can learn arbitrary nonlinear relations between predictors and distribution parameters in an automated and data-driven way. The neural network is trained using the CRPS as a custom loss function for the probabilistic forecasting task. Its inputs include NWP ensemble predictions and station information. The model is trained jointly for all locations but uses embeddings to make it locally adaptive. Other approaches, such as gradient boosting and random forests, require station-specific models to achieve good results.

Modern machine learning techniques offer unprecedented capabilities for data analysis and prediction, particularly in the field of post-processing. These methods can enable the inclusion of spatial and temporal information into model building and estimation, incorporate prior knowledge of the underlying physical processes, and allow for flexible modeling of complex and multivariate response distributions.

S2S predictions fill the gap between weather and climate forecasting and are issued more than two weeks but less than a season in advance. They are useful for various applications, such as flood control and agriculture management. Predictability at this time range is lower and comes mostly from slowly varying components of the earth system. S2S predictions focus on predicting large-scale and long-lasting meteorological events, such as heat waves, cold waves, droughts, and large-scale flooding. The forecasts are issued as weekly averages since there is little predictability in daily weather. Common forecast products include the probability of 2-metre temperature or precipitation being above normal, near normal, or below normal over a weekly period.
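
Such a three-category product can be derived from an ensemble by counting members relative to climatological tercile boundaries. The sketch below assumes the boundaries are already known; the weekly-mean temperatures and thresholds are hypothetical.

```python
def tercile_probabilities(members, lower, upper):
    """Fraction of ensemble members below, within, and above the
    climatological tercile boundaries `lower` and `upper`."""
    m = len(members)
    below = sum(1 for x in members if x < lower) / m
    above = sum(1 for x in members if x > upper) / m
    return below, 1.0 - below - above, above

# Hypothetical weekly-mean 2-metre temperatures (degrees C) from 10 members.
weekly_t2m = [14.2, 15.1, 15.8, 16.4, 16.9, 17.3, 17.8, 18.5, 19.0, 19.6]
probs = tercile_probabilities(weekly_t2m, lower=15.0, upper=17.0)
```

A climatological forecast would give one third to each category, so probabilities that depart from that are what carry the information.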

The production of S2S forecasts involves three main stages. The first stage, known as initialisation, involves the use of earth system observations and data assimilation techniques to obtain the most accurate approximation of the current state of the atmosphere and ocean. In the second stage, the ocean-atmosphere model is integrated multiple times with slight variations in initial conditions, allowing for an estimation of the forecast uncertainty.

The three stages of producing S2S forecasts are initialisation, multiple integrations, and production of forecast charts, with machine learning methods being useful in all three stages. The S2S AI Challenge focused on improving model calibration using ML methods and empirical methods, with the goal of providing the best possible prediction of 2-metre temperature and precipitation for weeks 3+4 and weeks 5+6 for a total of 52 S2S forecasts every Thursday of 2020 for all land areas. The competition was hosted by the Swiss Data Science Center, and ECMWF provided access to model S2S forecasts and past forecasts for training ML methods. Open-source ML methods were required for the competition.

Out of the 49 participants, only 5 were able to produce S2S forecasts that outperformed the ECMWF benchmark. The top three teams shared a prize of 30,000 Swiss Francs from WMO. The winning team utilized a Convolutional Neural Network to improve model calibration and provide optimal weights for multi-model combination, which resulted in more skillful forecasts over areas where the ECMWF forecasts were deficient. This competition confirmed that multi-model combination is an effective way to enhance S2S forecasts. The third winning entry used a purely empirical method based on random forest classification, which suggests that this approach can be competitive for S2S prediction. Although the skill improvement compared to the benchmark was marginal, the competition provided a test bed for new ML methods, allowing for clean comparisons of their performance for S2S prediction. A second phase of this competition may take place in a few years.

To summarize, the S2S AI Challenge showed that utilizing ML techniques can enhance subseasonal to seasonal predictions of temperature and precipitation by enhancing the calibration of model forecasts. These enhancements are expected to increase the usefulness of S2S forecasts for society.

Tier 1 Module 6: Computing

Evolution of HPC, State of the art: With each generation of technology release, HPC systems are becoming more powerful and efficient, while machine learning algorithms and methodologies are becoming more accurate and reliable. As a result, HPC systems are now mainstream solutions that underpin a wide range of research domains and services, from weather prediction to drug discovery.

Traditional supercomputing designs were influenced by workloads dominated by modeling and simulations, which targeted highly tuned applications using a set of input parameters with results that were visualized using data stored on local disks. However, workflows are now becoming much more complex, and data analysis is exceeding the simple approach of human interpretation by reviewing outputs on a screen. Data acquisition and simulation are becoming increasingly important stages in modern workflows, which impact on the storage designs that require greater capacity and information manipulation than traditional methods. This must be taken into account when designing the next generation of HPC systems.

Data assimilation is a prime example of HPC and ML aligning, driven through the explosion of information sources. Simulations and forecasts rely on millions of observations captured on a daily basis from satellites, sensors on ground/space observation points, and other sources. Assimilating this data into a coherent source of information, combined with improved computational capabilities, allows for greater forecast fidelity. The COVID-19 pandemic had a significant impact on forecast capabilities, as there were fewer aircraft in operation, resulting in less data being available for assimilation and impacting on prediction accuracy. Therefore, data assimilation from multiple sources is crucial, from next-generation exascale workflows to training models in predictive capabilities to mitigate the impact of missing or corrupt data sources. This will compensate and complement traditional methods and influence the optimization and design of applications.

ECMWF Compute Facility: ECMWF's latest supercomputing facility, awarded to Atos in 2019, uses the "BullSequana" platform which is exascale-ready and accommodates multiple technologies in a single rack. It provides a flexible environment for traditional and ML communities, and the latest system integrates different services into a single platform, providing an increase in sustained performance by over four times the capacity of the previous service. The integration of GPUs from NVIDIA and AMD provides complementary technology to support the development of codes and scientific insight in the field of Machine Learning.

How can ML help: Supercomputers are necessary for using Earth system models to predict weather and climate. However, machine learning can reduce the required computing power for conventional modeling. There are several reasons for this:

First, machine learning algorithms are often simpler than those used in conventional modeling, making them easier to optimize on different hardware.

Second, deep learning tools often use dense linear algebra, which performs many operations per data unit, making it more efficient for modern hardware.

Third, machine learning tools can often use lower numerical precision, reducing computational costs.

Fourth, the global AI market invests trillions of dollars in supercomputing, resulting in optimized hardware for machine learning.

Fifth, software libraries like PyTorch and TensorFlow enable scientists to develop powerful machine learning tools with minimal effort.

Sixth, machine learning tools require more power during training but are often more efficient when applied to make predictions.
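
The point about dense linear algebra performing many operations per data unit can be made concrete with a back-of-the-envelope calculation. The sketch below compares operations per byte for a square matrix product and for a vector addition, under the idealized assumption that every value is read or written exactly once. The function names and the 4-byte default are illustrative.

```python
def matmul_intensity(n, bytes_per_value=4):
    """Floating point operations per byte moved for an n x n matrix product,
    assuming each matrix is read or written exactly once."""
    flops = 2 * n ** 3                          # n^3 multiply-add pairs
    bytes_moved = 3 * n * n * bytes_per_value   # read A and B, write C
    return flops / bytes_moved

def vector_add_intensity(bytes_per_value=4):
    """Same measure for c = a + b: one operation per three values moved."""
    return 1 / (3 * bytes_per_value)

dense = matmul_intensity(1024)
sparse_like = vector_add_intensity()
```

The matrix product grows more favourable with n, while the vector addition stays memory-bound no matter how large the vectors are. Halving the bytes per value doubles both figures, which links to the point about lower numerical precision.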

ML for scalable meteorology and climate (MAELSTROM) aims to enhance the effectiveness and accuracy of machine learning (ML) tools in meteorology and climate prediction through the implementation of a co-design cycle. This approach involves the simultaneous development of both software and hardware to achieve optimal outcomes, where software enhancements and requirements are communicated to hardware developers, and hardware is customized to meet the needs of the software. Feedback is then provided to the software developers to further optimize the software to suit the new hardware. This iterative co-design process ensures the best possible results for both the software and hardware components.

ML with HPC: Modern High Performance Computing (HPC) systems consist of a combination of CPU and accelerator components. Each of these components requires different software adaptations to achieve the highest performance, so software developers must consider these differences when designing software for HPC systems. The computational components of modern HPC systems include CPUs, GPUs (Graphics Processing Units), and AI accelerators. The interconnection between these components is also crucial for efficient performance.

In summary, a supercomputer is a collection of compute nodes interconnected by a high-performance network. Each node is a multiprocessor with powerful processors, or CPUs, and each processor contains multiple cores capable of executing instructions. The processors used in a supercomputer typically have powerful vector units that can perform multiple floating point operations simultaneously, resulting in high FLOPS. To further increase performance, nodes may also have one or more accelerators, such as GPUs, which excel at regular calculations like matrix multiplication, making them crucial components of modern high-performance computing systems.

Taking the AMD EPYC architecture as an example, a processor of the 4th generation EPYC architecture contains multiple chips, with each chip having 4-8 cores and separate instruction and data cache memories. Additionally, each core has access to a 32 MB level-3 cache and 512-bit wide vector units, allowing for increased data-parallelism. With advanced techniques such as branch prediction, cache prefetching, and dynamic scheduling, each core can execute over 10 instructions from the same thread in parallel.

To form a powerful shared memory multiprocessor, the CPU chips are connected via an I/O chip which also handles communication off-chip. By adding an additional AMD processor, an even larger shared memory multiprocessor with up to 96 cores can be created. While modern CPUs excel at processing both single-threaded and multi-threaded code with the goal of achieving low execution times, a significant portion of the chip area is dedicated to cache memory. However, some computational patterns may not require this and could benefit more from additional computational units instead. A prime example is matrix multiplication, which is fundamental to most successful deep learning models.
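One way to see why matrix multiplication benefits from extra computational units rather than extra cache is its arithmetic intensity, the number of floating point operations per byte moved to or from memory. The sketch below uses the textbook operation counts for a dense n x n multiplication and assumes, as a simplification, that each matrix is read or written exactly once; the function names are mine.

```python
def matmul_arithmetic_intensity(n, bytes_per_element=4):
    """FLOPs per byte for C = A @ B with dense n x n matrices.

    Simplifying assumption: A and B are each read once and C is written once.
    """
    flops = 2 * n**3                         # n multiplies and ~n adds per output element
    bytes_moved = 3 * n**2 * bytes_per_element
    return flops / bytes_moved


def vector_add_arithmetic_intensity(bytes_per_element=4):
    """FLOPs per byte for c = a + b: one add per three elements moved."""
    return 1 / (3 * bytes_per_element)


for n in (64, 1024, 8192):
    print(n, round(matmul_arithmetic_intensity(n), 1))
print("vector add", round(vector_add_arithmetic_intensity(), 3))
```

The intensity of the matrix product grows linearly with n, while the vector addition stays constant at a low value. Large matrix multiplications therefore keep many arithmetic units busy per byte fetched, which is the pattern GPUs are built for.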

The GPU is characterized by its high density of simple processing cores, smaller cache memory, and high bandwidth to main memory. Modern GPUs offer more choices for floating point precision, including half precision and mixed precision, which enables fast training of deep neural networks at an acceptable accuracy. Traditionally, GPUs were programmed using low-level computational kernel languages like Nvidia's CUDA. However, there are now several software libraries that abstract this low-level programming model in favor of common computational patterns from linear algebra, so deep learning developers can use frameworks like PyTorch or TensorFlow without needing to go below the surface. Even so, GPU architectures might not be optimal if the dominant workload is training deep learning models. To address this, specialized accelerators for this type of workload are under active development. Google, for instance, has already released four generations of its Tensor Processing Unit (TPU). However, TPUs are exclusive to the Google Cloud environment and cannot be purchased separately.
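To illustrate why mixed precision, rather than pure half precision, is used for training, here is a small sketch that relies only on Python's standard `struct` module, whose `"e"` format code packs IEEE 754 half-precision values. The helper names `to_half` and `accumulate` are hypothetical, and a Python float (double precision) stands in for the wider accumulator that a GPU would typically keep in single precision.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def accumulate(increment, steps, half_accumulator):
    """Add a small half-precision increment to 1.0 `steps` times.

    If `half_accumulator` is True the running total is rounded to half
    precision after every addition; otherwise it is kept in a wider format.
    """
    total = 1.0
    inc = to_half(increment)
    for _ in range(steps):
        total = total + inc
        if half_accumulator:
            total = to_half(total)
    return total


half_total = accumulate(1e-4, 1000, half_accumulator=True)
wide_total = accumulate(1e-4, 1000, half_accumulator=False)
print(half_total)            # prints 1.0: every small update is rounded away
print(round(wide_total, 3))  # prints 1.1
```

Near 1.0, neighbouring half-precision numbers are about 0.001 apart, so an update of 0.0001 disappears when the total is stored in half precision. Keeping the accumulator in a wider format preserves these small updates while the bulk of the arithmetic can still run at the cheaper precision.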

Three examples of AI processors come from Graphcore in the UK and from two Californian companies, SambaNova (a spin-off from Stanford) and Cerebras. These processors feature architectures with simple computational units and memories arranged in a mesh-like structure. Cerebras employs an innovative single-wafer technology, containing over 400,000 cores on a single chip. Each processor has its own programming model, which is unfamiliar even to those with CUDA experience, so success depends on porting deep learning frameworks to these processors so that developers can continue using familiar Jupyter notebooks.

The fat-tree is a commonly used topology in modern supercomputers because it offers a balanced trade-off between latency and bisection bandwidth. It is a tree structure in which the compute nodes are the leaves, and neighbouring nodes communicate with low latency. The links become wider, that is, they carry more bandwidth, closer to the root of the tree, hence the name "fat-tree."
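The two properties of the fat-tree, low latency between neighbours and growing bandwidth towards the root, can be sketched with an idealized model. The code below assumes a complete tree in which every switch has the same number of children (`arity`) and leaves are numbered from left to right; real installations often deviate from this, and the function names are hypothetical.

```python
def link_hops(leaf_a, leaf_b, arity):
    """Number of links a message crosses between two leaves.

    The message climbs until it reaches the first switch shared by both
    leaves, then descends the same number of levels.
    """
    levels = 0
    while leaf_a != leaf_b:
        leaf_a //= arity  # index of the parent switch one level up
        leaf_b //= arity
        levels += 1
    return 2 * levels


def uplink_capacity(level, arity, leaf_bandwidth):
    """Uplink bandwidth of a switch `level` steps above the leaves.

    Idealized assumption: the uplink carries the combined bandwidth of all
    leaves below it, so links get "fatter" towards the root.
    """
    return arity**level * leaf_bandwidth


print(link_hops(0, 1, arity=4))    # prints 2: same first-level switch
print(link_hops(0, 4, arity=4))    # prints 4: one level higher
print(link_hops(0, 16, arity=4))   # prints 6: two levels higher
print(uplink_capacity(2, arity=4, leaf_bandwidth=100))  # prints 1600
```

In this model, placing communicating processes on neighbouring leaves keeps messages at the bottom of the tree, where latency is lowest.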

Cloud Computing: “A model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.”

Cloud computing is the provision of computing services over the internet, including servers, storage, databases, software, analytics, and intelligence. It has five essential characteristics: on-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service. Additionally, it has three service models and four deployment models, providing users with flexible resources, faster innovation, and economies of scale.

Service Models:

Service Model 1: Infrastructure as a Service (IaaS) is a service model where users are provided with the capability to provision fundamental computing resources such as processing, storage, and networks. The user has control over the operating systems, storage, and deployed applications, and can run arbitrary software, including operating systems and applications. However, the user does not manage or control the underlying cloud infrastructure, except for limited control over select networking components such as host firewalls and security rules for ingress/egress traffic.

Examples: Amazon Web Services, Microsoft Azure, and Google Compute Engine

Service Model 2: Platform as a Service (PaaS) is a cloud computing service model that enables users to deploy their own applications on the cloud infrastructure. Users can use programming languages, libraries, services, and tools supported by the provider to create their applications. The user has control over the deployed applications and can configure the application-hosting environment. However, they do not manage or control the underlying cloud infrastructure, such as the network, servers, operating systems, or storage.

Examples: MS Azure, AWS Lambda, and RH OpenShift

Service Model 3: Software as a Service (SaaS) is a cloud computing service model that allows users to access provider-hosted applications over the internet. The applications are available on a variety of devices and can be accessed via a thin client interface or a program interface. The user does not manage or control the underlying cloud infrastructure, including the network, servers, operating systems, storage, or individual application capabilities, except for limited user-specific application configuration settings.

Examples: Microsoft Office 365, Salesforce, and Google Workspace.

Service Model 4: "Anything as a Service" or simply "as a Service" (XaaS) refers to a business model that offers a product or service as a subscription or utility, accessible through an API or web console. This approach provides users, whether internal or external, with a service endpoint that allows them to interact with the product or service without having to worry about the underlying infrastructure or the management of the service.

The four basic cloud deployment models are private cloud, public cloud, community cloud, and hybrid cloud. Private cloud is exclusively used by a single organization, while public cloud is available for open use by the general public. Community cloud is exclusive to a specific community of consumers with shared concerns. Hybrid cloud is a combination of two or more distinct cloud infrastructures that remain unique but are bound together for data and application portability.

OpenStack and K8s:

OpenStack is a free, open standard cloud computing platform that is commonly used for public and private cloud deployments. It offers interrelated components that manage virtual servers and other resources, including computing, storage, and networking resources, throughout a data center. Users can manage these resources through various interfaces, such as a web-based dashboard or command-line tools. The key features are compute, storage, and networking.

Kubernetes, also known as K8s, is an open-source container-orchestration system used for automating application deployment, scaling, and management. It is highly modular and can be deployed on any cloud platform, including public, private, or hybrid clouds. Many cloud services offer Kubernetes-based platforms as a service or infrastructure as a service. Although Kubernetes is commonly deployed on top of virtual machine-based platforms like OpenStack or VMware, newer lightweight virtualization technologies are emerging that do not rely on full virtual machines for isolating workloads.

Cloud computing vs Desktop/Laptop: Cloud computing is more accessible than a desktop or laptop, as it can be reached from any web browser on any system with internet connectivity. It also provides access to a greater variety of platforms and complete solutions that can be integrated with new applications. Cloud computing also offers backups and updates, ensuring that systems can be restored to their original state in case of failure. Additionally, cloud computing resources are theoretically unlimited in how far they can scale, which supports greater productivity. Finally, cloud computing offers increased security based on international standards.

Cloud computing vs HPC: To summarize, cloud computing can be used for a wide range of tasks, including high-performance computing and specialized tasks, and offers more feature-rich software because users are free to install and integrate software packages. HPC, on the other hand, has more powerful hardware and tends to be used for solving complex, performance-intensive problems.

Cloud computing and ML: The cloud is an ideal tool for many machine learning (ML) use cases because it offers scalability, access to virtualized resources like GPUs and FPGAs, and easy code sharing and library installation. ML applications can be trained and run close to the data via the internet, and cloud technologies like containerization and orchestration can be used for implementation. Additionally, cloud computing provides easy access to storage resources, and the ML results and products can be easily shared.

The European Weather Cloud (EWC) project is a collaboration between ECMWF and EUMETSAT, aimed at bringing computation resources closer to big data in meteorology. It is a cloud-based collaboration platform for meteorological application development and operations in Europe, dedicated to supporting the National Hydrometeorological Services of the Member States of both organizations. The EWC is a community cloud that can be used for official duties and non-commercial work by Member and Cooperating States of ECMWF and EUMETSAT. There are currently 50 use cases in 18 countries, covering areas such as training, testing, and data processing.

The use cases of the European Weather Cloud project include algorithm testing, collaborative projects, experimental processing, provision of hosted services, mass processing of data, operational production, tailoring data, training, limited area modelling, climate data and reanalysis, machine learning/AI, federation between clouds, and data visualization and dissemination. These use cases involve processing and analysis of weather data, algorithm testing, collaboration, data processing, climate data record generation, data mining and AI techniques, and data visualization and dissemination to end-users.

The EWC provides direct access to the Ceph/Rados gateway through a load balancer using the Fully Qualified Domain Name storage.ecmwf.europeanweather.cloud. EWC users can also access the equivalent infrastructure at EUMETSAT over the Internet. ECMWF's production workflow includes data acquisition and pre-processing, model run and product generation, archiving in the Data Handling System (DHS), and dissemination through the ECMWF Production Data Store (ECPDS) to global data recipients. The EWC has direct access to ECMWF's archive and HPC infrastructure and can be used as a primary platform or as a backend for end-user applications running in a Public Cloud Service Provider, depending on the requirements and nature of the cloud application.

To be continued :)

Looking forward to learning more