Detecting Urban Green Areas

During a one-week seminar led by Prof. Anto Aasa, I immersed myself in the field of "Mobile & Location Based Services". The seminar included an extensive lecture on mobile and location-based services. My main task after the seminar was to write an essay on "Data protection in Germany (Europe) and the development of LBS".

For the practical component I carried out two projects, for which I implemented interactive visualisations in Python:

1. Analysis of foreign visitors in Estonia: Based on passive mobile positioning, I designed an interactive map with Plotly Scattermapbox that gives an insight into the movements and visiting patterns of foreign visitors in Estonia. The twist in this project was that, for data protection reasons, the countries were represented by the names of wild animals.

2. Analysis of tourist trips based on GPS data: Using data from the Android app "MobilityLog", I analysed the movement patterns of a tourist in California. The resulting map showed the itinerary and highlighted overnight stays, meal stops and more. Processing was not entirely straightforward because of inconsistencies in the dataset, but overcoming these problems made the results all the more rewarding.

All of my analytical work and the resulting visualisations are presented on this page. Take a look at the interactive maps and explore the patterns, densities and unique user experiences from both projects.

Link to my code on GitHub: https://github.com/Leonieen/MLBS

Python

Keywords:

Remote Sensing

Machine Learning

Sentinel 2

Urban Green Spaces

Idea

To keep the computing requirements of the project as low as possible, the procedure was divided into two levels.

In the project, we are starting at level 1, at city level or perimeter: Sentinel-2 satellite scenes serve as the data basis here. These are sufficiently large and high-resolution to cover entire urban areas and their surroundings, and they provide additional spectral bands that are beneficial for the detection of green spaces.

At level 1, the task is simple - it is a classification to determine whether an area is an urban green space or not. This uses Sentinel-2 imagery in conjunction with the Copernicus Corine Land Cover dataset. This land cover dataset is categorised into three classes: non-green, green and building. Areas of suitable size that could potentially be categorised as urban green spaces (UGS) are then filtered out.

At level 2, the green spaces identified in level 1 are analysed in more detail using higher resolution images to determine whether an area is a park or a cemetery based on distinguishing features. Here we take the boundary lines of the green areas identified in level 1 and pass them to the GeoSAM Python module, which crops these sections of the map for download. These images serve as training data for the classification, together with OpenStreetMap (OSM) data with specific tags such as leisure:park, amenity:funeral_hall, landuse:cemetery and building:true, among others.

This data is further refined in the GIS and adapted to the survey areas as required. For example, gravestones are precisely detailed using GeoSAM's bounding box segmentation to determine the exact area of each stone and exported as georeferenced vector files. As a second method of comparison for the headstones, entire rows of headstones are simply classified as polygons in order to visualise them at a larger scale than individually.

Both Level 1 and Level 2 classification use the XGBoost algorithm, which is known for its effectiveness and efficiency. In Level 2, the identification of a park or cemetery is based on the proportion of certain features (such as headstones, footpaths and green spaces) relative to the rest of the area. This can be used to determine whether there are enough characteristic elements to make a reliable classification.

Level 1

Level 1 involved a multi-stage process to classify urban green spaces. Here is an overview of how the task was approached:

Initial trials:

Loading training data as vector data into a Jupyter notebook and converting it into a DataFrame. This allowed us to use the data as labels (y) for our model. At this stage, we kept all the classes from the original dataset.

For more details: classification_landcover.ipynb.

Extension of the Classification_Augsburg_Sentinel Notebook:

Input: Sentinel 2 scene used for Augsburg, from summer 2022 without clouds

Compositing bands: This involved creating a composite image from the bands provided by Sentinel 2.

Training data input: We used the Urban Atlas Land Cover data for Augsburg as the training dataset.

Data generation from shapefiles: It was important to tailor these to the Sentinel images to ensure that the training data did not extend beyond the boundaries of the image. Each class was limited to a maximum number of features to avoid overloading, and features that did not contain a complete set of pixels or were outside the target area were discarded.

Band intensity overview: the intensity of the features in the different bands was analysed, which could be important for fine-tuning later.

Classifier training: The classifier was first trained with GaussianNB.

Prediction with the sentinel scene: Focused only on the urban area, and considering all available bands.

Results: Using all land cover classes proved to be of little help; most were misclassified as airports or railway lines.

Decision making: It was determined which classes should be retained and how they should be weighted, which was crucial for the development of the ontology.

Additional predictions with different models: Predictions were made using RandomForest, KMeans, KNeighbors and DecisionTree models.

The result: The results were inadequate because the models could not cope with the numerous classes.

Refined experiments with a simplified classification scheme: Despite simplifying the classifications into green/non-green, the results with the above models were still unsatisfactory and computationally intensive.

Further simplification with only three bands (8, 4, 3): Again, no better results were obtained, emphasising the need for a different approach.
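The model trials listed above can be sketched roughly as follows. This is a minimal illustration on synthetic data, not the notebook code: the two-class "pixel" samples, the three band columns and the one-third hold-out are all assumptions made for the example, and KMeans is left out because it is a clustering method that does not use labels.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(42)

# Synthetic stand-in for labelled pixels: 0 = non-green, 1 = green,
# with three hypothetical band values per pixel.
y = rng.integers(0, 2, size=400)
X = rng.normal(loc=y[:, None] * 0.5, scale=0.1, size=(400, 3))

# Hold out one third of the samples for validation (assumed split).
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=1 / 3, random_state=0, stratify=y
)

models = {
    "GaussianNB": GaussianNB(),
    "RandomForest": RandomForestClassifier(n_estimators=50, random_state=0),
    "KNeighbors": KNeighborsClassifier(n_neighbors=5),
    "DecisionTree": DecisionTreeClassifier(random_state=0),
}

# Fit every model on the same split and record its validation accuracy.
scores = {
    name: model.fit(X_train, y_train).score(X_val, y_val)
    for name, model in models.items()
}
```

On real Sentinel-2 pixels with many land cover classes the scores would be far lower than on this clean toy data, which is the situation described above.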

Final Approach for Level 1:

Switched to a Gradient Boosting model because the earlier models gave poor outcomes and took excessively long to produce results.

Refined the classes into Non-Green, Green, and Buildings, and entirely discarded "Airports" and "Railways", which had previously interfered with the predictions.

Used the Urban Atlas Land Cover data for Augsburg, defining the new classes.

Rasterized the data in ArcGIS Pro and split the raster into training and validation sets in a 2/3 ratio.

Created a composite from Sentinel 2 bands (2-8A) and calculated additional indices to serve as extra features for classification.
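The index step can be illustrated with a short sketch. The source does not say which indices were calculated, so NDVI and NDWI are assumed here, and the small band arrays are hypothetical reflectance values rather than project data.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b), with 0 where the denominator is 0."""
    a = np.asarray(a, dtype="float32")
    b = np.asarray(b, dtype="float32")
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


# Hypothetical 2x2 reflectance patches for B8 (NIR), B4 (red), B3 (green).
nir = np.array([[0.50, 0.40], [0.10, 0.00]])
red = np.array([[0.10, 0.20], [0.10, 0.00]])
green = np.array([[0.10, 0.10], [0.12, 0.00]])

ndvi = normalized_difference(nir, red)    # vegetation index (assumed)
ndwi = normalized_difference(green, nir)  # water index (assumed)

# Stack bands and indices into one array: (features, rows, cols).
features = np.stack([nir, red, green, ndvi, ndwi])
```

Each layer of `features` then becomes one column once the stack is flattened into a table of pixels.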

Model Training and Validation:

Converted the digital number values from Sentinel data to reflectance values, accounting for infinite values to avoid errors.

A new raster stack was created, and Coordinate Reference System (CRS) settings were aligned with one of the bands.

Ensured the training and validation sets matched the spatial scope of the data set.

These raster datasets were then converted to DataFrames, and their CRS was matched to that of the Sentinel data.

The XGBClassifier was trained with y as labels from the class column and X as all other values (the band values).

Post-Prediction Processing:

After ensuring all rasters had the same shape for prediction, the trained model was applied to the target image to produce the prediction.

A morphological operator was applied to the prediction raster. Considering the small and disconnected nature of the UGS of interest, the smallest possible structuring element was used to capture everything. However, it might be worth considering a larger element to filter out larger areas.
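A small sketch of this clean-up step is given below. The source does not name the operator, so a binary opening from SciPy is assumed, applied to a hypothetical green/non-green mask with the smallest symmetric structuring element (a 3x3 cross).

```python
import numpy as np
from scipy import ndimage

# Hypothetical binary prediction: True = green, False = everything else.
green = np.zeros((7, 7), dtype=bool)
green[1:5, 1:5] = True  # a 4x4 green patch
green[6, 6] = True      # an isolated single-pixel prediction

# 3x3 cross: the smallest symmetric structuring element in 2D.
structure = ndimage.generate_binary_structure(2, 1)

# Opening = erosion followed by dilation; it removes isolated pixels
# and rounds off the corners of larger patches.
opened = ndimage.binary_opening(green, structure=structure)
```

Here the isolated pixel disappears and the 4x4 patch loses only its four corner pixels. Passing a larger `structure` would remove correspondingly larger patches.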

Final Extraction and Analysis:

The focus was solely on the green areas, which we then extracted and saved as, for example, a Shapefile. This was possible through GeoSAM’s raster_to_vector() function.

To gain insights into the model's decisions, the SHAP values were also analyzed.
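The size filter mentioned in the Idea section (keeping only areas of suitable size) could be applied to the green mask before vectorising. The sketch below is one possible way to do this with connected-component labelling; the mask and the `min_pixels` threshold are hypothetical, and on a 10 m grid one pixel would correspond to 100 m².

```python
import numpy as np
from scipy import ndimage


def filter_by_area(mask, min_pixels):
    """Keep only connected regions with at least min_pixels pixels."""
    labeled, _ = ndimage.label(mask)       # 4-connected regions by default
    sizes = np.bincount(labeled.ravel())   # sizes[0] is the background
    keep = sizes >= min_pixels
    keep[0] = False                        # never keep the background
    return keep[labeled]


# Hypothetical green mask with one 9-pixel and one 1-pixel region.
mask = np.zeros((6, 6), dtype=bool)
mask[0:3, 0:3] = True
mask[5, 5] = True

large = filter_by_area(mask, min_pixels=5)
```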

This meticulous process allowed us to discern between non-green, green, and building areas within the urban fabric, and refine our model to a state where it offered a reasonable balance of accuracy and efficiency.

Level 2

Level 2 delves into the classification of more refined urban green space features using training data sourced from OpenStreetMap (OSM). Categories such as playgrounds, parks, benches, footways, memorials, cemeteries, grass, recreation grounds, and buildings are utilized. These categories are then consolidated into a class column and exported for further processing. Bench and footway features are buffered to appropriate scales and stored in a geopackage.

For the detailed identification of graves, GeoSAM is employed. Each gravestone is encircled with a bounding box, allowing GeoSAM to detect the stone within that box. The resulting raster is then vectorized, exported, and joined with the OSM dataset in GIS software, ensuring that no features overlap, which is critical for the subsequent rasterization process.

The imagery utilized for this level is defined by a bounding box and downloaded. These are typically Google satellite photos that have been corrected; the specifics can be found in the provided notebook, box_prompts. Initially, the classification process follows a similar path to that of the Sentinel data: vector datasets are loaded, and rasters are cut to match the size of vector/label data.

Early training attempts utilized the models that had previously proved ineffective on the Sentinel data, with the same assembly of data for predictions, now on RGB imagery. These models did not perform well here either, which was attributed to the difference in resolution compared with the Sentinel data.

The final procedure for Level 2 mirrored that of Level 1, involving the rasterization of training data in GIS software, dividing the training and validation set by a 2/3 ratio, and making slight adjustments to the hyperparameters of the XGBoost model.

The objective was to determine the presence of a cemetery by the percentage of the image that included cemetery features like paths and gravestones. Here, the challenge was to ascertain what percentage constitutes a significant presence of cemetery characteristics. This task was further complicated by the misclassification of many graves as paths, leading to an experiment where all cemetery features were combined into one class. However, this approach yielded worse results.
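The percentage-based decision described above can be sketched as follows. The class ids, the small prediction raster and the threshold are all hypothetical; the source leaves open which share counts as a significant presence of cemetery features.

```python
import numpy as np


def class_shares(pred, ignore=0):
    """Return {class_id: fraction of the pixels that are not `ignore`}."""
    valid = pred[pred != ignore]
    ids, counts = np.unique(valid, return_counts=True)
    return {int(i): float(c) / valid.size for i, c in zip(ids, counts)}


# Hypothetical class ids: 0 = no information, 1 = grass, 2 = path, 3 = grave.
pred = np.array([
    [0, 1, 1, 2],
    [0, 3, 3, 2],
    [1, 3, 3, 2],
    [1, 1, 2, 2],
])

shares = class_shares(pred)

# Share of pixels showing cemetery features (paths and graves).
cemetery_ids = (2, 3)
cemetery_share = sum(shares.get(i, 0.0) for i in cemetery_ids)

THRESHOLD = 0.3  # hypothetical cut-off, not a value from the project
is_cemetery = cemetery_share >= THRESHOLD
```

Because paths also occur in parks, a rule of this kind is sensitive to the grave/path confusion described above.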

The grave shapes generated by GeoSAM did not perform well, prompting a shift to larger polygons representing rows of graves rather than individual ones. This required substantial manual corrections to the training data. Large cemetery and park areas from OSM proved to be unhelpful, leading to their removal and the manual addition of features such as grass, trees, and graves before rasterization.

Attempts to define non-relevant classes around the main features, along with specific characteristic classes for cemeteries, were unsuccessful due to their specificity to one training area.

Key points in training data design emerged:

- Include only classes that are truly relevant for identifying parks or cemeteries.

- Restrict the number of classes, with around five being optimal. More than seven classes led to deteriorating results.

- The training set must generate a class 0 for 'no information', essential for distinguishing the areas outside of parks or cemeteries. Dropping these values, as tried in the Göggingen predictions, negatively impacted the results.

- Balancing the dataset is critical, as too many class 0 features can skew the model and extend training times. A potential solution is to randomly reduce the number of class 0 features to match the size of the most populous feature class.
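The balancing idea from the last point could look roughly like this. It is a sketch on synthetic arrays, assuming the features are held in `X` and the labels in `y`; the function name is hypothetical.

```python
import numpy as np


def undersample_class(X, y, target=0, seed=0):
    """Randomly reduce `target` to the size of the largest other class."""
    rng = np.random.default_rng(seed)
    other_counts = [int(np.sum(y == c)) for c in np.unique(y) if c != target]
    if not other_counts:
        return X, y  # nothing to balance against
    limit = max(other_counts)
    target_idx = np.flatnonzero(y == target)
    if target_idx.size <= limit:
        return X, y  # class is already small enough
    kept = rng.choice(target_idx, size=limit, replace=False)
    # Keep the sampled target rows plus all rows of the other classes.
    idx = np.sort(np.concatenate([kept, np.flatnonzero(y != target)]))
    return X[idx], y[idx]


# Synthetic labels: 100 'no information' pixels, 10 and 25 feature pixels.
y = np.array([0] * 100 + [1] * 10 + [2] * 25)
X = np.arange(y.size * 2).reshape(-1, 2)

X_bal, y_bal = undersample_class(X, y)
```

After the call, class 0 has as many samples as the largest feature class, while the other classes are untouched.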

In conclusion, it may be more effective to train binary models for each characteristic feature, as models attempting to identify multiple classes simultaneously tended to yield poorer and noisier results.

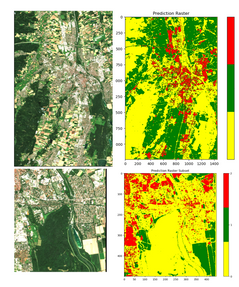

Results

In summary, the project aimed at detecting urban green spaces (UGS) unfolded across two levels of complexity, with a significant emphasis on the use of machine learning techniques for classification based on non-deep learning methods.

Level 1 laid the groundwork by identifying potential UGS through Sentinel 2 imagery and the Copernicus Corine Land Cover dataset. Initial classification attempts using various models such as GaussianNB, RandomForest, and DecisionTree faced challenges, notably due to the complexity of the urban landscape and the multitude of land cover classes. Simplifying the classification to Non-Green, Green, and Buildings, and switching to a Gradient Boosting model, resulted in more accurate predictions and more efficient processing. Key vegetation indices and satellite band composites were employed to augment the model's performance.

Level 2 further refined the classification by discerning between parks and cemeteries within the urban green spaces. Training data from OpenStreetMap (OSM) provided detailed categorization, which included features such as playgrounds, benches, footways, and grave sites. The use of GeoSAM for detailed feature identification and vectorization was crucial, although it presented challenges that necessitated manual adjustments to the training data. Attempts to streamline the model's efficiency included reducing the number of feature classes and strategically managing 'no information' values.

The final phase of the project harnessed the XGBoost algorithm, with fine-tuning of hyperparameters to improve prediction accuracy. Despite initial difficulties, the model settled at a point where balanced accuracy and Cohen's Kappa statistics indicated a reliable classification system.
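For reference, the two statistics named above can be computed from a confusion matrix as sketched below. The labels are made up for illustration and are not project results.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def balanced_accuracy(cm):
    """Mean of the per-class recalls."""
    recall = np.diag(cm) / cm.sum(axis=1)
    return float(recall.mean())


def cohen_kappa(cm):
    """Agreement between truth and prediction, corrected for chance."""
    n = cm.sum()
    p_observed = np.trace(cm) / n
    p_expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    return float((p_observed - p_expected) / (1 - p_expected))


# Made-up labels for three classes.
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 2, 2, 2])
y_pred = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2, 0])

cm = confusion_matrix(y_true, y_pred, 3)
bal_acc = balanced_accuracy(cm)
kappa = cohen_kappa(cm)
```

scikit-learn provides the same measures as `balanced_accuracy_score` and `cohen_kappa_score` in `sklearn.metrics`. Both are less sensitive to a dominant class, such as class 0, than plain accuracy.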

The project highlights the intricate balance between model complexity and predictive accuracy. Binary models for individual features emerged as a potential avenue for more precise classification, suggesting a future direction for continued research and development in the field of urban green space detection. The findings and methodologies established in this project provide a substantial contribution to the understanding and management of urban green spaces, emphasizing their critical role in urban planning and the environment.

Discussion

The project's outcomes prompt a discussion on the viability and challenges of machine learning methods in the classification of urban green spaces. While significant progress was made, the results also highlight areas for potential enhancement and future exploration.

One of the key takeaways is the need for high-quality, detailed training data. The difficulty in classifying complex urban features such as parks and cemeteries underscores the importance of precision in training datasets. The manual adjustments needed due to misclassifications of features like graves as pathways indicate that automated systems can still benefit from human oversight and local knowledge, especially when dealing with nuanced urban features.

The simplification of feature classes improved model performance, suggesting that too many classes can confuse the model, leading to "noisy" data. However, this raises the question of how to balance the need for detailed classification with the need to maintain a manageable number of classes for the model. Future work could explore more sophisticated methods of feature selection and reduction to optimize model performance without oversimplifying the urban landscape.

The challenges encountered with non-deep learning methods, particularly concerning resolution and feature distinction, suggest that further research could compare these results with those obtained using deep learning techniques. This could potentially validate the chosen approach or provide insight into whether the benefits of reproducibility and model simplicity ultimately outweigh the enhanced capabilities of deep learning models.

Another problem was the resolution of the images and the small-scale structure of the features unique to cemeteries and parks. For example, benches were not visible and therefore could not be identified. It was also very difficult to find any distinguishing features at all. A successful classification based on these alone is rather unlikely, and in future more attention should be paid to the spatial relationships between these features and possibly to shapes and proportions. But this is also very challenging, as hardly any two parks are the same. Cemeteries can also take on different forms. With cemeteries in particular, it was also noticeable that some had a lot of green space while others appeared very grey, which again raises the question: is every cemetery also a UGS?

Finally, future work could include the development of a more dynamic model that adjusts its parameters based on the urban context it is applied to, taking into account regional differences in urban green space characteristics. There could also be value in creating a more interactive model that allows urban planners and environmental scientists to input local knowledge and feedback to refine the classification in real time.